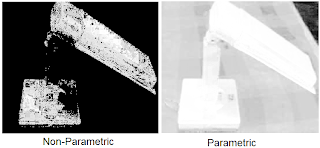

Our goal is to extract the acceleration of the object along the incline. First, we need to separate the region of interest (ROI) from the background. From our previous lessons, we have two choices for image segmentation: color and binary. Since the video does not contain important color information, I chose binary image segmentation. The crucial step in this method is the choice of the threshold value; for this activity, I found that a threshold of 0.6 produced reasonably good results. However, a clean segmentation was still not achieved, because light reflecting off some parts of the image made the color of the ramp uneven. A gif image of the result after thresholding is shown below:

Clearly, this is not what we want, so I performed an opening operation using a 10x5 structuring element, both to further separate the ROI from the background and to smooth the edges of the ROI. The resulting set of images is shown below:

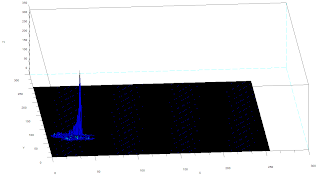
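To make the two segmentation steps concrete, here is a minimal sketch on a made-up 7x7 frame. It uses only base Scilab: the helper `morph` is a hypothetical from-scratch function written for this illustration (it is not the SIP toolbox's `erode`/`dilate`), and a 3x3 structuring element replaces the 10x5 one so that the effect is visible on such a small image.

```scilab
// Hypothetical helper (not part of SIP): binary erosion or dilation with an
// odd-sized structuring element `se`, using zero (background) padding.
function out = morph(img, se, op)
    [r, c] = size(img);
    [sr, sc] = size(se);
    pr = floor(sr / 2);
    pc = floor(sc / 2);
    padded = zeros(r + 2 * pr, c + 2 * pc);
    padded(pr + 1:pr + r, pc + 1:pc + c) = img;
    out = zeros(r, c);
    for i = 1:r
        for j = 1:c
            win = padded(i:i + sr - 1, j:j + sc - 1);
            vals = win(se == 1);
            if op == "erode" then
                out(i, j) = bool2s(and(vals == 1));   // every pixel under se is foreground
            else
                out(i, j) = bool2s(or(vals == 1));    // at least one pixel under se is foreground
            end
        end
    end
endfunction

// Made-up grayscale frame: a bright 4x4 object plus one bright speck
gray = 0.2 * ones(7, 7);
gray(2:5, 2:5) = 0.9;
gray(7, 7) = 0.8;

bw = bool2s(gray > 0.6);     // binary segmentation with the 0.6 threshold from the text
se = ones(3, 3);             // small element chosen only for this toy image
opened = morph(morph(bw, se, "erode"), se, "dilate");   // opening = erosion, then dilation

disp(sum(bw));       // foreground pixels after thresholding
disp(sum(opened));   // foreground pixels after opening
```

On this toy frame the foreground count should drop from 17 to 16: the isolated speck is removed while the 4x4 object is restored to its original size, which is the behavior the opening is used for above.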

Now we can track the position of the ROI in time. The position in pixels is obtained from the average x pixel position of the ROI in each image, so distance is initially in pixels and time is in frames. The conversion factor we obtained is 2 mm/pixel, and the frame rate of the video is 24.5 fps. After conversion, I obtained an acceleration of 0.5428 m/s^2.
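The conversion can be written out explicitly. The symbols here are notation introduced only for this sketch: \bar{y}_k is the mean pixel position in frame k, s is the scale, and f is the frame rate. The forward differences follow the way the code further down computes velocity and acceleration.

```latex
\begin{aligned}
y_k &= s\,\bar{y}_k, & s &= 2\times10^{-3}\ \text{m/pixel},\\
v_k &= (y_{k+1}-y_k)\,f, & f &= 24.5\ \text{frames/s},\\
a_k &= (v_{k+1}-v_k)\,f = (y_{k+2}-2y_{k+1}+y_k)\,f^{2}.
\end{aligned}
```

With these values, one pixel/frame^2 corresponds to s f^2 = 0.002 x 24.5^2 = 1.2005 m/s^2, so the measured 0.5428 m/s^2 corresponds to a second difference of roughly 0.45 pixel/frame^2.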

Analytically, from Tipler, the acceleration of a hollow cylinder along an inclined plane is given by a = (g*sin(theta))/2, where theta is the angle of the incline; in our case, theta = 6 degrees. Using the formula, we get 0.5121 m/s^2. This translates to an error of roughly 5.66%. The Scilab code I used is given below:
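For reference, the formula can be recovered from a short sketch of the standard rolling-without-slipping argument. The symbols m, R, I, \alpha and the friction force f_s are introduced here for illustration, the cylinder is assumed to be thin-walled (I = mR^2), and g = 9.8 m/s^2 is assumed because it reproduces the quoted value.

```latex
\begin{aligned}
m g \sin\theta - f_s &= m a && \text{(translation along the incline)}\\
f_s R &= I\alpha = (mR^{2})\,\frac{a}{R} && \text{(rotation, with } a = R\alpha\text{)}\\
\Rightarrow\quad a &= \frac{g\sin\theta}{2}
   = \frac{(9.8\ \text{m/s}^2)\,\sin 6^\circ}{2} \approx 0.512\ \text{m/s}^2
\end{aligned}
```

The difference between the measured and analytic values is 0.5428 - 0.5121 = 0.0307 m/s^2. Dividing by the measured value gives about 5.66%, while dividing by the analytic value gives about 6.0%.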

------------------------------------------------------------------------------

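chdir("C:\Users\RAFAEL JACULBIA\Documents\subjects\186\activity17\hollow");

I = [];
x = [];
y = [];
vs = [];
as = [];
xs = [];   // mean row index of the ROI in each frame (pixels)
ys = [];   // mean column index of the ROI in each frame (pixels)

se = ones(10,5);   // 10x5 structuring element for the opening

for i = 6:31
    k = i - 5;   // store from index 1 so that no leading zeros enter the differences
    I = imread("hollow" + string(i-1) + ".jpg");
    I = im2bw(I,0.6);               // binary segmentation with threshold 0.6
    I = dilate(erode(I,se),se);     // opening: erosion followed by dilation
    imwrite(I , string(i)+ ".png" );
    I = imread( string(i) + ".png");
    [x,y] = find(I==1);             // row and column indices of the ROI pixels
    xs(k) = mean(x);
    ys(k) = mean(y);
end

ys = ys*2*10^(-3);                  // pixels to meters (2 mm/pixel)
vs = (ys(2:25)-ys(1:24))*24.5;      // velocity in m/s (24.5 fps)
as = (vs(2:24)-vs(1:23))*24.5;      // acceleration in m/s^2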

-------------------------------------------------------------

I grade myself 9/10 for this activity, since the error percentage I obtained was not very high.

Collaborators:

Ed David

JM Presto

The average value of the difference is 0.359 with standard deviation of 0.318. This translates to an error of roughly 1%.
